Avoiding shortcut solutions in artificial intelligence

If your Uber driver takes a shortcut, you might get to your destination faster. But if a machine learning model takes a shortcut, it could fail in unexpected ways.

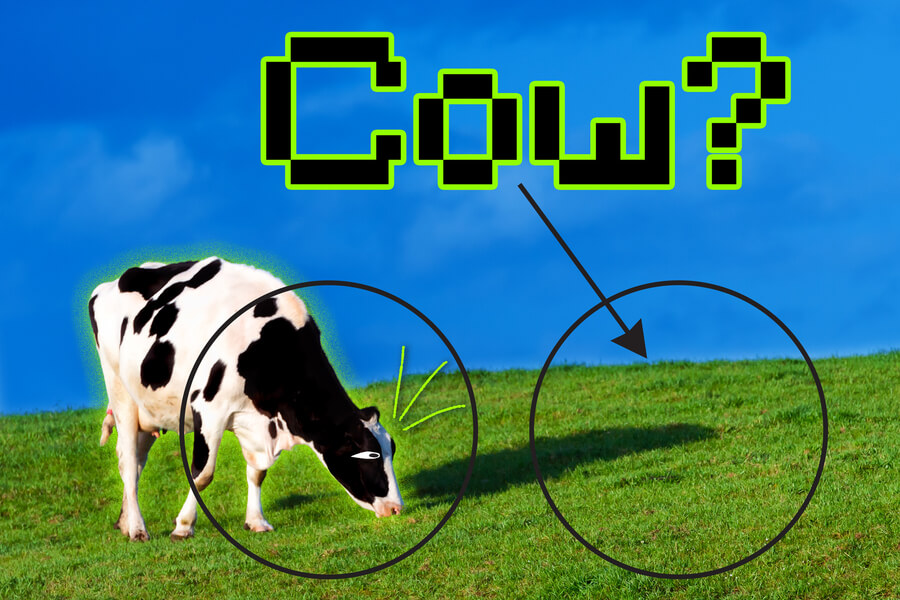

In machine learning, a shortcut solution occurs when a model relies on a single simple characteristic of a dataset to make a decision instead of learning the true meaning of the data. This can lead to incorrect predictions. For example, a model might learn to recognize images of cows by looking at the green grass in the photos rather than at the more complex shapes and patterns of the cows themselves.

Researchers at MIT have published a new study that examines the problem of shortcuts in machine learning. They propose a solution that prevents shortcuts by forcing the model to use more of the data when making decisions.

By removing the simpler characteristics the model focuses on, the researchers force it to consider more complex features of the data that it had not been using. They then ask the model to solve the same task two ways, once using the simpler features and once using the more complex features it has now learned to recognize. This reduces the tendency toward shortcut solutions and improves the model’s performance.

One potential application of this work is improving machine learning models that detect disease in medical images. In that setting, shortcut solutions could lead to false diagnoses, with potentially dangerous consequences for patients.

It is still difficult to tell why deep networks make the decisions they do and, more specifically, which parts of the data they choose to focus on when making a decision. Joshua Robinson, a doctoral student in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the paper, said that a better understanding of how shortcuts work would help answer some of the fundamental but very practical questions that matter to people who want to deploy these networks.

Robinson co-authored the paper with senior author Suvrit Sra, the Esther and Harold E. Edgerton Career Development Associate Professor in the Department of Electrical Engineering and Computer Science (EECS) and a core member of the Institute for Data, Systems, and Society (IDSS); Stefanie Jegelka, the X-Consortium Career Development Associate Professor in EECS and a member of CSAIL and IDSS; University of Pittsburgh Assistant Professor Kayhan Batmanghelich; and PhD students Li Sun and Ke Yu. The paper will be presented at December’s Conference on Neural Information Processing Systems.

The long road to understanding shortcuts

The researchers focused their study on contrastive learning, a powerful form of self-supervised machine learning. In self-supervised learning, a model is trained on raw data that does not include label descriptions from humans. Because no human labeling is needed, it can be applied to much more data.

Self-supervised learning models learn useful representations of data, which then serve as inputs for various tasks such as image classification. If the model takes shortcuts and fails to capture important information, those downstream tasks cannot use that information either.

For example, suppose a self-supervised learning model is trained to classify pneumonia in X-rays taken at several hospitals, but it learns to make predictions based on a tag that identifies which hospital a scan came from (because some hospitals have more pneumonia cases than others). That model will not perform well when it is given data from a new hospital.
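The hospital-tag scenario can be made concrete with a toy sketch. Everything here is hypothetical and invented for illustration: the records, the `opacity` field standing in for real image content, and the two hand-written rules. It is not the researchers' experiment, only a small demonstration of why a shortcut that looks perfect on the training hospitals can break at a new one.

```python
# Hypothetical toy records: "opacity" stands in for real image content,
# and "hospital" is the tag a shortcut-prone model might latch onto.
train = [
    {"hospital": "A", "opacity": 0.9, "pneumonia": True},
    {"hospital": "A", "opacity": 0.8, "pneumonia": True},
    {"hospital": "A", "opacity": 0.7, "pneumonia": True},
    {"hospital": "B", "opacity": 0.2, "pneumonia": False},
    {"hospital": "B", "opacity": 0.1, "pneumonia": False},
    {"hospital": "B", "opacity": 0.3, "pneumonia": False},
]
new_hospital = [
    {"hospital": "C", "opacity": 0.9, "pneumonia": True},
    {"hospital": "C", "opacity": 0.2, "pneumonia": False},
    {"hospital": "C", "opacity": 0.8, "pneumonia": True},
    {"hospital": "C", "opacity": 0.1, "pneumonia": False},
]


def shortcut_rule(record):
    # Predicts from the hospital tag alone and ignores the image content.
    return record["hospital"] == "A"


def content_rule(record):
    # Predicts from the (toy) image content and ignores the tag.
    return record["opacity"] > 0.5


def accuracy(predict, records):
    # Fraction of records where the rule matches the true label.
    correct = sum(1 for r in records if predict(r) == r["pneumonia"])
    return correct / len(records)


for name, rule in [("shortcut", shortcut_rule), ("content", content_rule)]:
    print(name, accuracy(rule, train), accuracy(rule, new_hospital))
```

In this toy data both rules fit the training hospitals equally well, so training accuracy alone cannot tell them apart. Only the data from the unseen hospital exposes the shortcut.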

In contrastive learning models, an encoder algorithm is trained to discriminate between pairs of similar inputs and pairs of dissimilar inputs. This process encodes rich, complex data, such as images, in a form the contrastive learning model can interpret.
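A minimal sketch of what "discriminating between similar and dissimilar pairs" can look like in code follows. The article does not say which loss the researchers used, so this assumes a common softmax-style contrastive objective (often called InfoNCE) over cosine similarities. The function names, the `temperature` value, and the example vectors are all illustrative assumptions.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    # Scale a vector to unit length so dot products are cosine similarities.
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def contrastive_loss(anchor, positive, negatives, temperature=0.5):
    """Assumed InfoNCE-style loss for one anchor embedding.

    The loss is low when the anchor is closer to its similar ("positive")
    partner than to any of the dissimilar ("negative") inputs.
    """
    a = normalize(anchor)
    pos_logit = dot(a, normalize(positive)) / temperature
    neg_logits = [dot(a, normalize(n)) / temperature for n in negatives]
    logits = [pos_logit] + neg_logits
    # Numerically stable log-sum-exp over all candidate partners.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - pos_logit


# The positive matches the anchor, so the pair is easy to pick out.
easy = contrastive_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
# The "positive" looks less like the anchor than the negatives do.
hard = contrastive_loss([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0], [0.9, 0.1]])
print(easy, hard)
```

Training an encoder means adjusting it so that this kind of loss drops across many pairs. Any feature that separates positives from negatives will do, which is where shortcuts can creep in.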

The researchers tested contrastive learning encoders on a series of images and found that they, too, fall prey to shortcut solutions during training. To decide which pairs of inputs are similar and which are dissimilar, the encoders tend to focus on the simplest features of an image. Ideally, the encoder should focus on all the useful characteristics of the data when making a decision, Jegelka says.

So the team made it harder to tell similar and dissimilar pairs apart, and found that doing so changes which features the encoder looks at to make a decision.

“If you make it harder to distinguish between similar and dissimilar items, your system is forced to learn more meaningful information in the data, because without learning that it cannot solve the task,” Jegelka says.

However, increasing the difficulty led to a tradeoff: the encoder got better at focusing on some features of the data but worse at focusing on others. It almost seemed to forget the simpler features, Robinson said.

To avoid this tradeoff, the researchers asked the encoder to discriminate between pairs in two ways: as it had originally, using the simpler features, and again after the researchers had removed the information it had already learned. Solving the task both ways at once led the encoder to improve across all features.

Their method, called implicit feature modification, adaptively modifies samples to remove the simpler features the encoder is using to distinguish between pairs. The technique does not require human input, Sra explained. This is crucial because real-world datasets can contain hundreds of features that combine in complicated ways.
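The "solve it both ways" idea can be sketched in a few lines. The article gives no formulas, so this is a loose illustration under stated assumptions rather than the researchers' implementation. It assumes that removing the currently used features can be approximated by shifting similarity scores by a hypothetical budget `epsilon` (positives look less similar, negatives look more similar), and that the two versions of the task are simply averaged. The names `pair_loss` and `two_way_loss`, and the parameter values, are invented for this example.

```python
import math


def _log_sum_exp(values):
    # Numerically stable log(sum(exp(v))) for a list of floats.
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def pair_loss(pos_sim, neg_sims, temperature=0.5, epsilon=0.0):
    """Softmax-style contrastive loss on precomputed similarity scores.

    Assumption: a positive `epsilon` mimics stripping away the features the
    encoder currently relies on, so the similar pair scores lower and each
    dissimilar pair scores higher.
    """
    pos_logit = (pos_sim - epsilon) / temperature
    neg_logits = [(s + epsilon) / temperature for s in neg_sims]
    return _log_sum_exp([pos_logit] + neg_logits) - pos_logit


def two_way_loss(pos_sim, neg_sims, temperature=0.5, epsilon=0.1):
    # Solve the task both ways at once: on the original similarities and
    # on the harder, modified ones, then average the two losses.
    original = pair_loss(pos_sim, neg_sims, temperature, epsilon=0.0)
    harder = pair_loss(pos_sim, neg_sims, temperature, epsilon=epsilon)
    return 0.5 * (original + harder)


original = pair_loss(0.8, [0.1, -0.3])
harder = pair_loss(0.8, [0.1, -0.3], epsilon=0.1)
combined = two_way_loss(0.8, [0.1, -0.3])
print(original, harder, combined)
```

Keeping the original term in the average is the point of the design as the article describes it: the encoder is still rewarded for the simpler features while also being pushed to find others, rather than trading one set for the other.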

From cars to COPD

The researchers ran one test of the method using images of cars. They used implicit feature modification to make it harder for the encoder to distinguish between similar and dissimilar pairs of images. The encoder improved its accuracy on all three features (texture, shape, and color) simultaneously.

To see whether the method would hold up on more complex data, the researchers also tested it on samples from a medical image database for chronic obstructive pulmonary disease (COPD). Again, the method led to simultaneous improvements across all the features they evaluated.

This work is a significant step toward understanding the causes of shortcut solutions and working to solve them. However, the researchers say that future advances will depend on continuing to refine these methods and applying them to other types of self-supervised learning.

Robinson says this ties into some of the biggest questions about deep learning systems, such as “Why do they fail?” and “Can we predict the situations in which your model will fail?”