A tiny machine-learning design reduces memory consumption on internet-of-things devices

Researchers can use machine learning to predict and identify patterns and behaviors, and to learn, optimize, and perform tasks. Applications include vision systems for autonomous vehicles and social robots, smart thermostats, and wearable and mobile devices such as smartwatches that monitor health. These algorithms and their architectures have become more powerful and efficient, but they require large amounts of memory, computation, and data to train them and to make inferences.

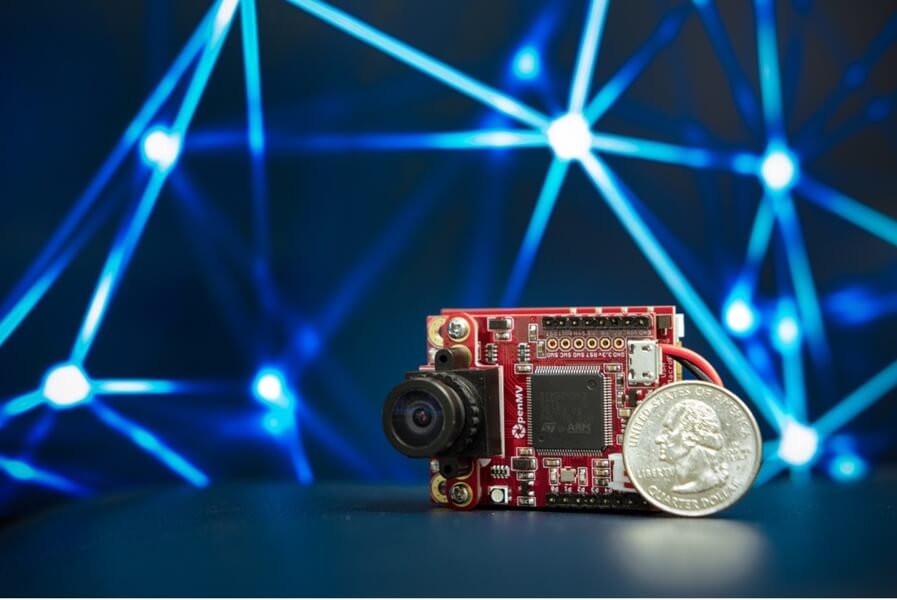

Researchers are also working to reduce the size and complexity of the devices these algorithms can run on, down to the microcontroller unit (MCU) found in billions of internet-of-things (IoT) devices. An MCU is a memory-limited minicomputer housed in a compact integrated circuit; it lacks an operating system and runs simple commands. These low-power, low-cost edge devices need little bandwidth, which makes them well suited to AI technology that expands their utility, increases privacy, and democratizes their use. This field is called TinyML.

A team of MIT researchers working on TinyML, including the research group of Song Han, an assistant professor in the Department of Electrical Engineering and Computer Science (EECS), has created a technique that reduces the memory required while improving image recognition in live video.

Han, who designs TinyML software and hardware, says that “our new technique can do much more” and paves the way for tiny machine learning on edge devices.

To increase TinyML’s efficiency, Han and his colleagues in EECS and the MIT-IBM Watson AI Lab analyzed how memory is used by microcontrollers running various convolutional neural networks (CNNs). CNNs are biologically inspired models, loosely based on neurons in the brain, and are often applied to evaluate and identify visual features within imagery, such as a person walking through a video frame. The study revealed an imbalance in memory usage that front-loaded the computer chip and created a bottleneck. To solve the problem, the team developed a new neural architecture and inference technique that reduced peak memory usage by a factor of four to eight. The team then deployed both on their own TinyML vision system, which is equipped with a camera and capable of object and human detection, creating MCUNetV2. MCUNetV2 outperformed other machine learning methods running on microcontrollers, and its high detection accuracy opens the door to new vision applications.
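The imbalance can be reproduced with a rough model of layer-by-layer inference, in which each block must hold its full input and output feature maps in memory at the same time. This is a minimal sketch under stated assumptions: the shapes describe a hypothetical MobileNet-style int8 network (one byte per activation), not the CNNs the team profiled, and the names `BLOCK_OUTPUT_SHAPES` and `layer_by_layer_profile` are illustrative. The 256 KB budget is the MCU memory figure quoted in this article.

```python
# Hypothetical int8 CNN: shapes are illustrative, not taken from MCUNetV2.
INPUT_SHAPE = (224, 224, 3)
BLOCK_OUTPUT_SHAPES = [
    (112, 112, 16),
    (112, 112, 24),
    (56, 56, 24),
    (56, 56, 40),
    (28, 28, 40),
    (28, 28, 80),
    (14, 14, 96),
    (14, 14, 160),
    (7, 7, 320),
]
SRAM_BYTES = 256 * 1024  # memory budget of the target MCU


def activation_bytes(shape, bytes_per_value=1):
    """Size of one feature map (height x width x channels) in bytes."""
    h, w, c = shape
    return h * w * c * bytes_per_value


def layer_by_layer_profile(input_shape, block_shapes):
    """Bytes each block needs when its full input and output are held at once."""
    profile = []
    prev = input_shape
    for shape in block_shapes:
        profile.append(activation_bytes(prev) + activation_bytes(shape))
        prev = shape
    return profile


profile = layer_by_layer_profile(INPUT_SHAPE, BLOCK_OUTPUT_SHAPES)
for i, used in enumerate(profile, start=1):
    flag = "over budget" if used > SRAM_BYTES else ""
    print(f"block {i}: {used / 1024:7.1f} KiB {flag}")
```

With these assumed shapes, only the first three blocks exceed the budget, while the last blocks need roughly a tenth of the peak. Because the peak, not the average, decides whether a model fits, a few early blocks can rule out the whole network.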

A design to improve memory efficiency and redistribution

TinyML has many advantages over deep machine learning that runs on larger devices, such as smartphones and remote servers. Han points out that TinyML offers privacy, since data are processed locally on the device rather than sent to the cloud. It also provides robustness and low latency, and it is inexpensive, at around $1 to $2. In addition, some larger, more traditional AI models can produce as much carbon as five cars over their lifetimes, require many GPUs, and cost billions of dollars to train. Han says that TinyML technologies can help us go off-grid, reduce carbon emissions, and make AI smarter, more efficient, faster, and more accessible to everyone: in other words, democratize AI.

However, MCUs have little memory and limited digital storage, which makes AI applications difficult to deploy, so efficiency is a major challenge. MCUs contain only 256 kilobytes of memory and 1 megabyte of storage. By comparison, mobile AI on smartphones and cloud computing may have 256 gigabytes and terabytes of storage, respectively, as well as 16,000 and 100,000 times as much memory. Lin and Chen said the team treated memory as a precious resource and wanted to optimize its usage, so they profiled the MCU memory usage of CNN designs.

The profiling showed that the first five convolutional blocks, out of 17, were the most memory-intensive. Each block contains many connected convolutional layers, which filter for specific features in an input image or video and produce a feature map as output. During this initial memory-intensive stage, most blocks exceeded the 256KB memory limit, leaving plenty of room for improvement. To reduce peak memory, the researchers devised a patch-based inference schedule that operates on only a fraction of a layer’s feature map at a time. Compared with the layer-by-layer computational approach, the new method reduces memory usage by four to eight times without adding latency.
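A minimal sketch of patch-based inference, assuming a single-channel feature map, two stacked 3x3 stride-1 convolutions, and no padding. These are simplifications for illustration, and every function name here is hypothetical. The code computes the same output patch by patch and records the largest intermediate buffer each schedule needs to hold.

```python
def conv3x3_valid(x, w):
    """Single-channel 3x3 cross-correlation, stride 1, no padding."""
    rows, cols = len(x), len(x[0])
    return [
        [
            sum(x[i + di][j + dj] * w[di][dj] for di in range(3) for dj in range(3))
            for j in range(cols - 2)
        ]
        for i in range(rows - 2)
    ]


def split(length, parts):
    """Split range(length) into `parts` contiguous (start, end) chunks."""
    bounds = [length * k // parts for k in range(parts + 1)]
    return list(zip(bounds[:-1], bounds[1:]))


def two_layers_layer_by_layer(x, w1, w2):
    """Materialize the whole intermediate map, then run the second layer."""
    mid = conv3x3_valid(x, w1)
    return conv3x3_valid(mid, w2), len(mid) * len(mid[0])


def two_layers_patch_based(x, w1, w2, parts=2):
    """Run both layers on one spatial patch at a time (parts x parts patches)."""
    out_rows, out_cols = len(x) - 4, len(x[0]) - 4
    out = [[0] * out_cols for _ in range(out_rows)]
    largest_mid = 0
    for r0, r1 in split(out_rows, parts):
        for c0, c1 in split(out_cols, parts):
            # Each output pixel depends on a 5x5 input window (two 3x3 layers),
            # so the input patch is 4 pixels larger than the output patch per side.
            patch = [row[c0:c1 + 4] for row in x[r0:r1 + 4]]
            mid = conv3x3_valid(patch, w1)
            largest_mid = max(largest_mid, len(mid) * len(mid[0]))
            for i, row in enumerate(conv3x3_valid(mid, w2)):
                out[r0 + i][c0:c1] = row
    return out, largest_mid


x = [[(7 * i + 3 * j) % 11 for j in range(20)] for i in range(20)]
w1 = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]
w2 = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]
full_out, full_mid = two_layers_layer_by_layer(x, w1, w2)
patch_out, patch_mid = two_layers_patch_based(x, w1, w2, parts=2)
assert patch_out == full_out  # same result, computed patch by patch
print(full_mid, patch_mid)
```

In this toy case, splitting the output into 2x2 patches shrinks the largest intermediate buffer from 324 values (18x18) to 100 (10x10) while producing an identical output. The four 10x10 intermediate patches add up to 400 values versus 324 for the full map, because the overlapping borders are computed more than once.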

Han compares the approach to eating a pizza: cut it into four pieces and eat only one at a time, and you only need to handle about a quarter of it at once, saving roughly three-quarters. That, he explains, is the patch-based inference method. “But this wasn’t a free lunch,” Han says. Like photoreceptors in the human eye, a network layer takes in and examines only part of an image at a time; this receptive field is a small patch of the whole image. As the receptive fields (the pizza slices, in this analogy) grow larger, neighboring patches overlap and some computation is repeated, an overhead the researchers found to be about 10 percent. To address this, they proposed redistributing the neural network across blocks in tandem with the patch-based method, preserving the accuracy of the vision system. The question remained which blocks required the patch-based method and which could use the original layer-by-layer approach. Hand-tuning all of these knobs is labor-intensive and better left to AI.
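The cost of overlapping receptive fields can be estimated with a back-of-the-envelope model. This sketch assumes a stack of unpadded, stride-1 layers with equal channel counts and an output split into equal square patches; `patch_overhead` is a hypothetical helper, and because it ignores padding, strides, and channel changes, its numbers are illustrative only. It shows the trend: the more layers a patch-based stage spans, the wider each patch’s receptive field and the more work is repeated, which is consistent with redistributing the network so the patch-based stage stays shallow.

```python
def patch_overhead(out_size, parts, n_layers, kernel=3):
    """Extra computation of patch-based vs layer-by-layer inference (toy model).

    Assumes n_layers stride-1, unpadded kernel x kernel layers with equal
    channel counts, and an out_size x out_size output split into
    parts x parts equal patches.
    """
    if out_size % parts:
        raise ValueError("out_size must be divisible by parts")
    grow = kernel - 1  # each layer widens the required input by kernel - 1
    tile = out_size // parts
    # Layer j (counting back from the output) produces a map of side out_size + j*grow.
    full = sum((out_size + j * grow) ** 2 for j in range(n_layers))
    patched = parts**2 * sum((tile + j * grow) ** 2 for j in range(n_layers))
    return patched / full - 1


for depth in (1, 2, 4, 6):
    print(depth, f"{patch_overhead(16, 2, depth):.1%}")
```

A single layer per patch adds no overhead, but the repeated work grows quickly as more layers are run patch by patch.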

“We want this to be automated by doing a joint automatic search for optimization, which includes both the neural network architecture, such as the number of layers, the number of channels, and the kernel size, and the inference schedule, including the number of patches, the number of layers for patch-based inference, and other optimization knobs,” Lin says, “so that non-machine-learning specialists can have a push-button solution to increase computation efficiency and improve engineering productivity, in order to deploy this neural network onto microcontrollers.”
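The idea of searching architecture knobs and inference-schedule knobs together can be illustrated with a deliberately tiny stand-in. This is a sketch under loud assumptions, not the team’s search: an exhaustive grid search replaces their optimization, and the cost models (`peak_memory`, `compute_cost`), the accuracy proxy (`capacity`), the budgets, and the knob values are all hypothetical. A real search would estimate accuracy for each candidate and explore a far larger space.

```python
from itertools import product

MEMORY_BUDGET = 256 * 1024  # bytes of SRAM (hypothetical target MCU)
COMPUTE_BUDGET = 6_000_000  # multiply-accumulates (hypothetical latency proxy)

# Architecture knobs (resolution, channels, kernel) and an inference-schedule
# knob (patches per side; 1 means plain layer-by-layer inference).
SEARCH_SPACE = {
    "resolution": [96, 128, 160],
    "channels": [16, 24, 32],
    "kernel": [3, 5],
    "patches": [1, 2, 4],
}


def tile_size(cfg):
    """Side length processed at once, including the receptive-field halo."""
    n, res = cfg["patches"], cfg["resolution"]
    if n == 1:
        return res
    # Assume the patch stage is two stride-1 layers: halo of (kernel - 1) per layer.
    return res // n + 2 * (cfg["kernel"] - 1)


def peak_memory(cfg):
    """Toy int8 peak-activation model for the memory-heavy first stage."""
    res, ch = cfg["resolution"], cfg["channels"]
    if cfg["patches"] == 1:
        return 2 * res * res * ch  # full input and output maps held together
    t = tile_size(cfg)
    # One patch's input and output, plus the assembled stride-2 stage output.
    return 2 * t * t * ch + (res // 2) ** 2 * ch


def compute_cost(cfg):
    """Toy MAC count; overlapping halos make patched schedules cost more."""
    side = cfg["patches"] * tile_size(cfg)
    return side * side * cfg["channels"] * cfg["kernel"] ** 2


def capacity(cfg):
    """Hypothetical accuracy proxy: more pixels and channels score higher."""
    return cfg["resolution"] ** 2 * cfg["channels"]


def joint_search(space):
    """Return (score, config) of the best candidate meeting both budgets."""
    best = None
    for values in product(*space.values()):
        cfg = dict(zip(space, values))
        if peak_memory(cfg) > MEMORY_BUDGET or compute_cost(cfg) > COMPUTE_BUDGET:
            continue
        score = capacity(cfg)
        if best is None or score > best[0]:
            best = (score, cfg)
    return best


print(joint_search(SEARCH_SPACE))
```

Under these made-up numbers, no layer-by-layer schedule (`patches=1`) fits in 256 KB at all. The winner is a 128-pixel, 32-channel network run with 4x4 patches, while a larger 160-pixel candidate that fits in memory is rejected because its halo overhead exceeds the compute budget. That interplay is why the architecture and the schedule are worth searching jointly.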

A new future for tiny vision systems

Co-designing the network architecture and the inference schedule through the neural search optimization provided significant gains. The approach was adopted into MCUNetV2, which outperformed other vision systems in peak memory usage and in image and object classification. The MCUNetV2 device has a small screen and a camera and is about the size of an earbud case. Chen says that, compared with the first version, the new version needed only a quarter of the memory to achieve the same accuracy. MCUNetV2 also outperformed other TinyML solutions at detecting objects, such as human faces, in image frames. It set a new accuracy record of almost 72 percent for image classification on the ImageNet dataset, using 465KB of memory. The researchers also tested visual wake words, that is, how well the MCU vision model could identify the presence or absence of people within images. Even with only 30KB of memory, it achieved more than 90 percent accuracy, beating the previous state-of-the-art method. The method is accurate enough to be used in smart-home applications.

MCUNetV2’s high accuracy, low energy consumption, and low cost unlock new IoT applications. Because of their limited memory, Han says, vision systems on IoT devices were once thought to be good only for basic image classification tasks, but the team’s work has helped TinyML flourish. The research team envisions applications in many fields, from monitoring sleep and joint movement in health care, to coaching sports movements such as a golf swing, to plant recognition in agriculture.

“We really push for these larger-scale, real-world applications,” Han says. “Our technique does not require any GPUs or specialized hardware, and it can run on small, inexpensive IoT devices. This allows us to perform real-world applications such as person detection, face mask detection, and visual wake words. It opens up a new way of doing tiny AI and mobile vision.”